This fall an unusual four-month experiment pitted generative AI against conventional digital tools in eight separate creative tasks. Fifty students wrote, coded, designed, modeled, and recorded creations with and without AI, then judged the results. Undergrads in UMaine's 2023 Introduction to New Media course, taught by Jon Ippolito with assistance from Sean Lopez, repeated the same eight tasks using pre- and post-AI approaches, from writing essays to coding game avatars to creating 2D, 3D, and time-based media. Computer scientists Greg Nelson and Troy Schotter are analyzing the results and plan to share their analysis with the broader educational community.

Each genre of digital media has different technical and ethical nuances, so the experience was revealing (and rather overwhelming). Greg and Troy are just starting to dig into the trove of data our collaboration has conjured, but we can already discern some trends.

In writing essays, for example, students were impressed with GPT-4’s essay structure, examples, and grammatical prose but felt citations were often unreliable and the style sometimes overly elaborate or formal. One described the AI as a student trying really hard to sound smart. Having been coached on incremental prompting to improve results, another student remarked that it took about the same amount of time to coax a good enough essay from the chatbot as to write his original essay.

The audiovisual assignments were especially revealing of generative AI's best practices and limitations. Once coached in incremental prompting techniques, most students were able to get the AI to write p5.js code that drew a convincing if simplistic animal within a half-dozen iterations, and with a lot less hand-holding than they had needed during the lesson on coding by hand. Likewise, students creating illustrated stories were able to steer away from clichés, but only after repeated prompting.

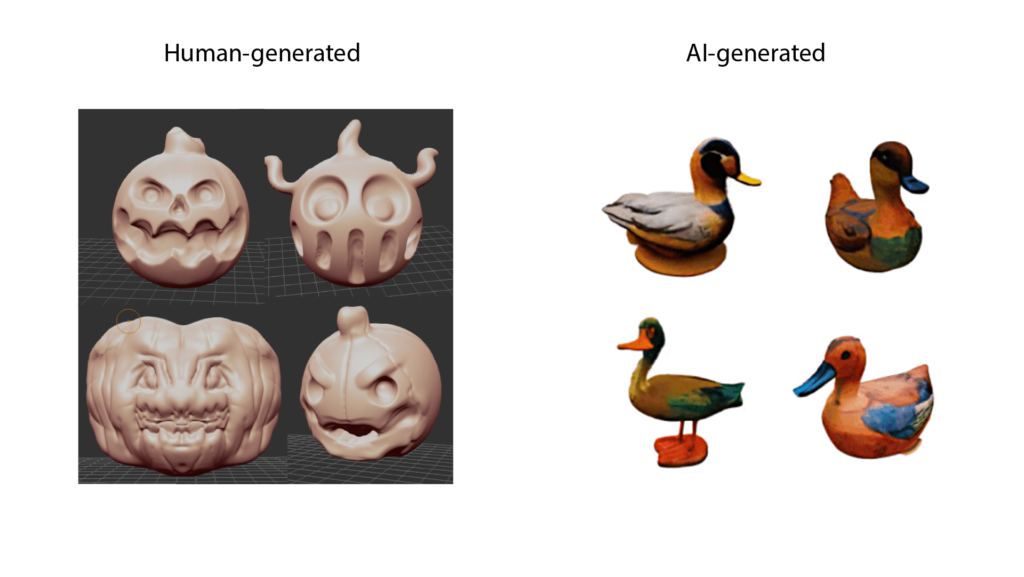

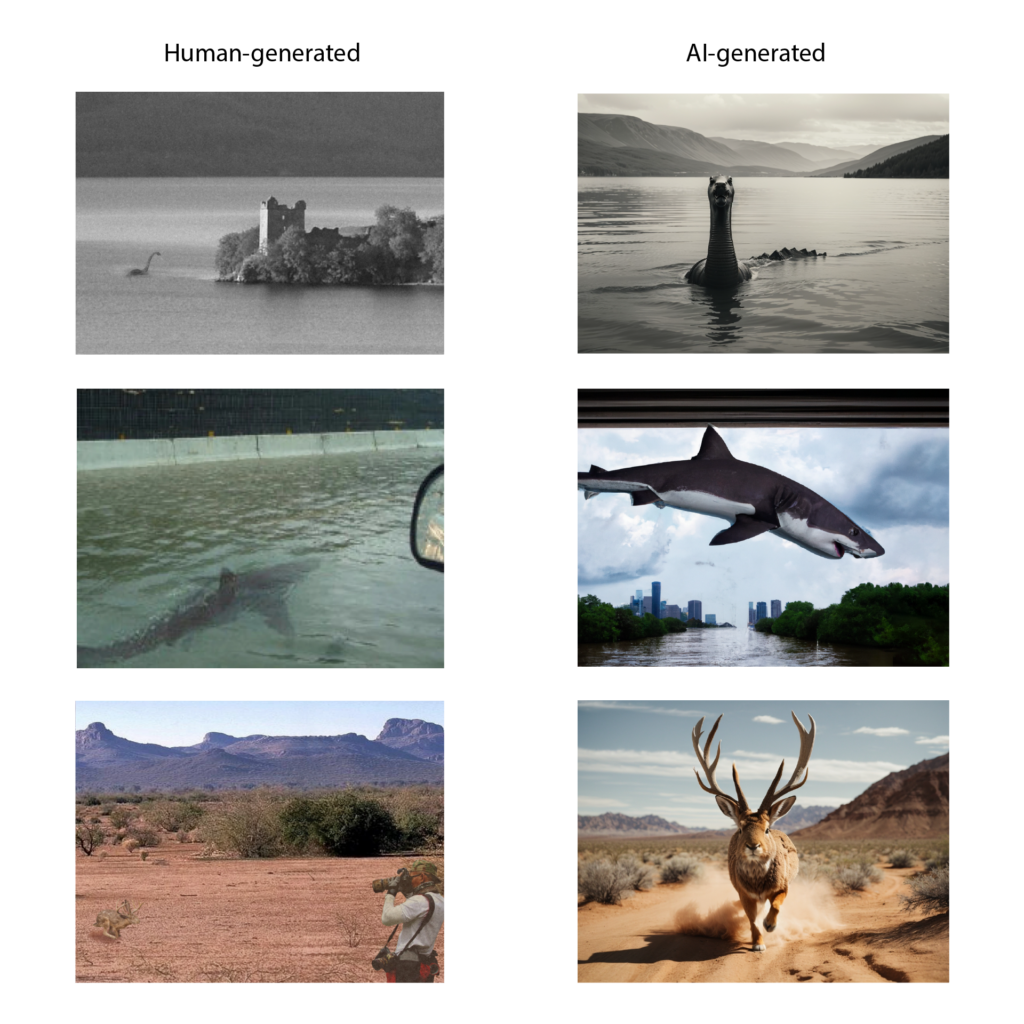

Students struggled to produce realistic AI-generated images and audio. Photoshop composites based on Juliana Castro’s assignment to illustrate a hoax were grainier and overall more convincing than AI fakery, which often produced hyper-realistic, frontal compositions that were too formulaic to be credible. AI soundscapes of meadows or streets typically came off as clumsy stereotypes compared to the subtle audio textures in actual recordings. Students who layered multiple AI-generated tracks achieved better results, as in Jazzmyne Haines’ sound sample of a “Drop Bear” koala attack.

One of the most provocative assignments asked students to have GPT-4 critique and grade a past assignment from this course, then compare the results to the actual feedback given by human graduate teaching assistants (with their permission). The assignment also invited students to generate alternative AI feedback and grades for assignments from outside this course.

On average, students rated the human and AI-generated critiques as comparably accurate and constructive, with the TAs giving more specific recommendations. GPT-4 seemed to be a harsher grader, and several students hypothesized that the AI did not correctly grasp the rubric or the level of this first-year course. Although the general tone of both was encouraging, some students found the TAs' feedback more inspiring because they knew it came from a human with emotions.

The study was conducted in conjunction with the Learning With AI initiative, a multi-institutional effort to crowdsource strategies and resources for educators, learners, and creators. Because of the course's experimental nature, students were graded on participation only; requirements included detailed surveys about their experiences as well as live interviews with a select number of volunteers. Troy wrote a bespoke tool through which students accessed GPT-4 and which captured their inputs and outputs, although all data will subsequently be anonymized.

Even after the lessons, students seemed to feel more confident with a traditional approach than with AI. Most reported low-to-moderate confidence about achieving their writing goals with AI, and even less confidence about how to use AI ethically. We hope future research will reveal whether this insecurity stems from inexperience or is endemic to AI tool use.

Look for a comprehensive analysis to be published in the coming year, or learn more about the Learning With AI toolkit, which is already available.