When a tech reporter for the NY Times outsourced her decisions for a week to ChatGPT, she complained that “AI made me basic.” But it turns out the math behind generative AI can lead to results that are blandly average or wildly inaccurate.

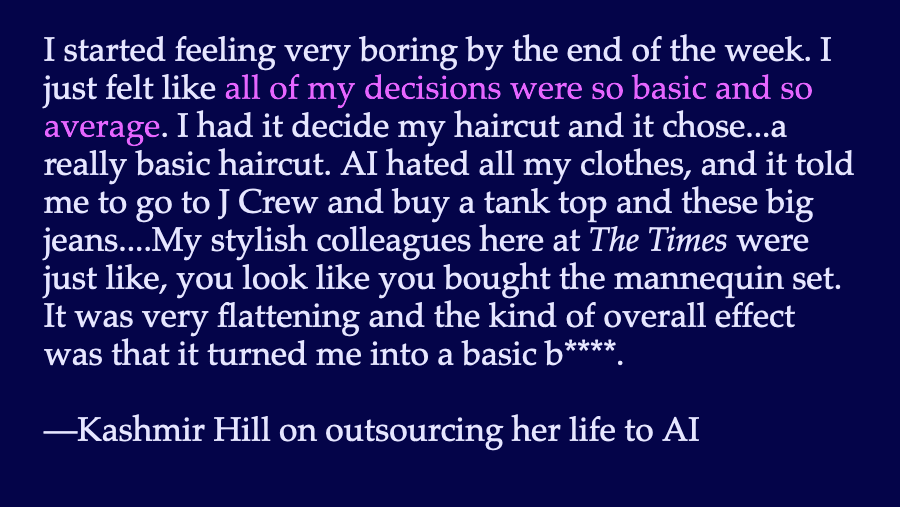

Kashmir Hill’s idea to let generative AI dictate everything from her haircut to her relationship may seem like a risky gambit, but it turned out to be a lot more tame than expected. AI’s commands were sensible enough not to damage her relationship (“let your husband play golf”) but also so average that her wardrobe ended up looking like a J Crew catalog.

I’ve written before about how averages are at the heart of generative AI. In his interview with Hill, Hard Fork co-host Casey Newton takes a stab at the pros and cons of averaging:

“These systems often steer you toward the median or the average outcome, right? You write that they risk flattening us out. At the same time, there are a lot of tasks I’m really bad at, and if I could be brought up to the median easily like that would be great for me.”

Unfortunately for Casey, large language models do not exactly steer toward the median; they steer toward the mean. In statistical terms, the median is the point at which half the data is on one side and half on the other. The median number of children in US families is 2, so there are roughly equal numbers of households with 1 or no kids and households with 3 or more.

The mean, by contrast, is the sum of the values of all data points divided by the number of points. The mean number of children in US households as of 2023 was 1.94. Obviously no family actually has that exact number of kids.

The median is almost always a real point from the original training data (with an even number of points it can fall between the two middle values), but the mean is a feat of mathematical imagination. The mean could be in the original data, but it could also be an invention that lies in the no-man’s land between widely separated real data. To find the mean is to find the center of gravity, but there are some things, like horseshoes, whose center of gravity is not actually part of the thing itself.
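To make the distinction concrete, here is a minimal sketch using Python's standard `statistics` module. The household counts and the two-cluster list are made-up illustrative numbers, not census figures; they only show that a median can be a real data point while a mean can land where no data exists.

```python
from statistics import mean, median

# Hypothetical numbers of children in nine households (illustrative only).
children = [0, 0, 1, 1, 2, 2, 3, 3, 5]

med = median(children)  # middle value of the sorted list
avg = mean(children)    # sum of the values divided by the count

print("median:", med, "| appears in data:", med in children)
print("mean:", round(avg, 2), "| appears in data:", avg in children)

# Hypothetical data with two widely separated clusters, like the two
# arms of a horseshoe: the mean falls in the empty gap between them.
clusters = [1, 1, 2, 9, 10, 10]
gap_mean = mean(clusters)
nearest = min(abs(x - gap_mean) for x in clusters)

print("mean of clusters:", gap_mean, "| distance to nearest real point:", nearest)
```

With an odd number of values, as here, the median is one of the original entries. The mean of the second list sits several units away from anything that was actually observed.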

When there are plenty of data points near the mean, large language models return sensible, if sometimes boring, results. There are thousands of web pages offering button-down shirts and chino pants, so asking AI for fashion advice is likely to return these “basic” styles.

For factual requests, this is an advantage when there’s plenty of data near the mean; we want AI to tell us the capital of France is Paris even if that’s the “average” answer. But chatbots produce incorrect results when data near the mean is sparse. Google’s AI Overviews recommended “at least one” when asked how many rocks a person should eat each day, because the only web page remotely near that ridiculous query was a satirical article from The Onion.

On the other hand, for creative requests, the ability to return points not originally in the dataset is a strength of AI. I asked GPT-4o to recommend preppie clothing styles that would work for large families, and it suggested adding more pockets to chinos and giving kids brightly colored t-shirts to make them more visible in crowds. And we’ve all seen examples like turning the US Tax Code into a Homeric poem or Choose-Your-Own-Adventure Novel.

(Hard Fork co-host Kevin Roose asks whether there could be a system setting that could return less average results. There already is—a model’s “temperature”—but it’s hidden from view in typical chatbot interfaces.)
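For the curious, here is a rough sketch of what temperature does, assuming the common approach of dividing a model's raw scores by the temperature before converting them to probabilities. The clothing options and their scores are invented for illustration, and the function name `softmax_with_temperature` is my own; real chatbots apply this idea to scores over word fragments, not outfits.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical options and raw scores (not taken from any real model).
options = ["chinos", "jeans", "kilt"]
scores = [3.0, 2.0, 0.5]

cool = softmax_with_temperature(scores, 0.5)  # low temperature: safe picks dominate
warm = softmax_with_temperature(scores, 2.0)  # high temperature: odd picks get a chance

for name, p_cool, p_warm in zip(options, cool, warm):
    print(f"{name:7s} T=0.5: {p_cool:.2f}   T=2.0: {p_warm:.2f}")

# Sample one choice at the higher temperature (seeded so runs repeat).
rng = random.Random(0)
pick = rng.choices(options, weights=warm, k=1)[0]
print("sampled at T=2.0:", pick)
```

Turning the temperature down concentrates probability on the most typical answer, while turning it up gives less common answers more of a chance.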

Apart from the other ethical issues surrounding AI, understanding the difference between median and mean is key to knowing when AI is a benefit or bane.