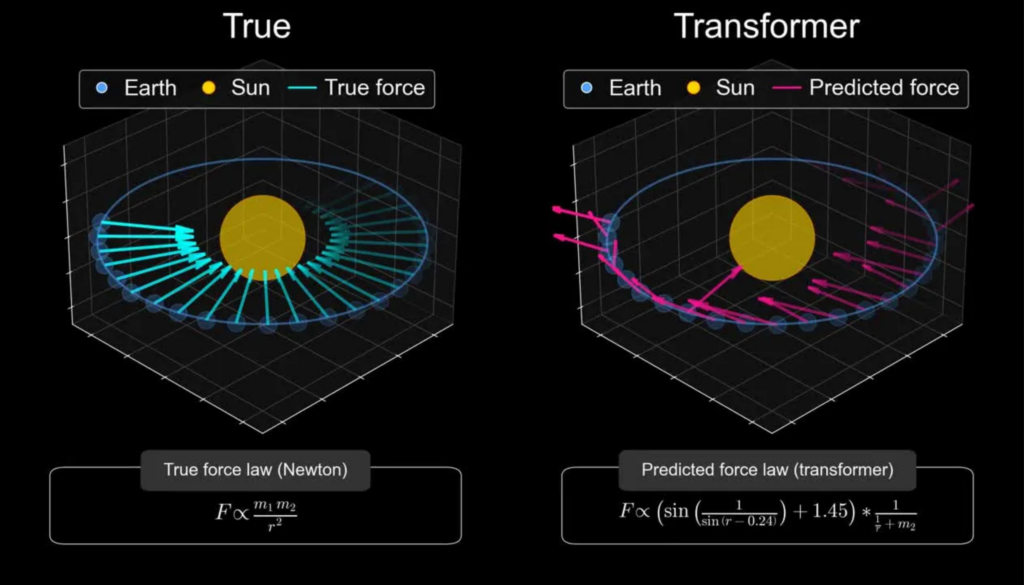

Of all the AI buzzwords out there, the word “model” would seem free of hyperbole compared to “superintelligent” or the “singularity.” Yet this innocuous-seeming word can mean two contradictory things, and AI companies are deliberately blurring the line between them. A recent Harvard/MIT study of AI simulations of planetary orbits illustrates the contrast between what scientists consider a “world model” and what AI enthusiasts think transformers are generating.

When the researchers trained a transformer to reproduce planetary orbits, the AI jury-rigged an arbitrary set of predictions that happened to match the data. This is very different from boiling the laws of motion down to a few fundamental principles, as a scientific model requires. More disappointing still, each transformer arrived at a different wacky force law depending on the data sample it was given. State-of-the-art LLMs and a custom transformer trained specifically on orbital trajectories showed the same incoherence.

A scientific model is something very different from a stochastic output simulator. It’s predictive, sure, but its predictions derive from a handful of generalizable principles that teach us something fundamental about the nature of the system, whether a hydrogen atom or a galaxy.
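
What distilling data into a "handful of generalizable principles" looks like can be sketched in a few lines. This is not the Harvard/MIT study's code or method; it is a hypothetical illustration in arbitrary units (GM = 1, starting radius 1, starting speed 1.2), and the helper names `simulate_orbit` and `fit_force_law` are invented here. It simulates an orbit under an inverse-square pull, estimates accelerations from the positions alone by finite differences, and fits a power law `|a| = GM * r**p` by least squares on the logarithms.

```python
import math


def simulate_orbit(steps: int, dt: float, gm: float = 1.0) -> list[tuple[float, float]]:
    """Velocity Verlet integration of a body pulled toward the origin by an inverse-square force."""

    def accel(x: float, y: float) -> tuple[float, float]:
        r3 = (x * x + y * y) ** 1.5
        return -gm * x / r3, -gm * y / r3

    x, y, vx, vy = 1.0, 0.0, 0.0, 1.2  # start at r = 1; speed 1.2 gives a bound ellipse
    ax, ay = accel(x, y)
    path = [(x, y)]
    for _ in range(steps):
        x += vx * dt + 0.5 * ax * dt * dt
        y += vy * dt + 0.5 * ay * dt * dt
        new_ax, new_ay = accel(x, y)
        vx += 0.5 * (ax + new_ax) * dt
        vy += 0.5 * (ay + new_ay) * dt
        ax, ay = new_ax, new_ay
        path.append((x, y))
    return path


def fit_force_law(path: list[tuple[float, float]], dt: float) -> tuple[float, float]:
    """Fit log|a| = log(GM) + p*log(r) using accelerations estimated only from positions."""
    log_r, log_a = [], []
    for (x0, y0), (x1, y1), (x2, y2) in zip(path, path[1:], path[2:]):
        ax = (x2 - 2 * x1 + x0) / dt**2  # central second difference
        ay = (y2 - 2 * y1 + y0) / dt**2
        log_r.append(math.log(math.hypot(x1, y1)))
        log_a.append(math.log(math.hypot(ax, ay)))
    mean_r = sum(log_r) / len(log_r)
    mean_a = sum(log_a) / len(log_a)
    slope = sum((u - mean_r) * (v - mean_a) for u, v in zip(log_r, log_a)) / sum(
        (u - mean_r) ** 2 for u in log_r
    )
    return slope, math.exp(mean_a - slope * mean_r)  # (exponent p, GM)


dt = 0.001
path = simulate_orbit(steps=20_000, dt=dt)  # about 1.3 orbital periods
half = len(path) // 2
fits = [fit_force_law(chunk, dt) for chunk in (path[:half], path[half:])]
for label, (p, gm) in zip(("first half", "second half"), fits):
    print(f"{label}: |a| ~ {gm:.4f} * r^{p:.4f}")
```

Because the data were generated with an inverse-square force, recovering an exponent near -2 is by construction. The point is the contrast: fitting two different slices of the trajectory returns the same two-parameter law, whereas the transformers in the study arrived at a different force law for each data sample.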

As an astrophysics major in college, I heard historian of science Owen Gingrich say something that blew my mind at the time. Copernicus’ original Sun-centered model of our solar system met resistance from scientists not just because it displaced humanity from the center of the cosmos, but also because it didn’t fit the facts as well as Earth-centered models.

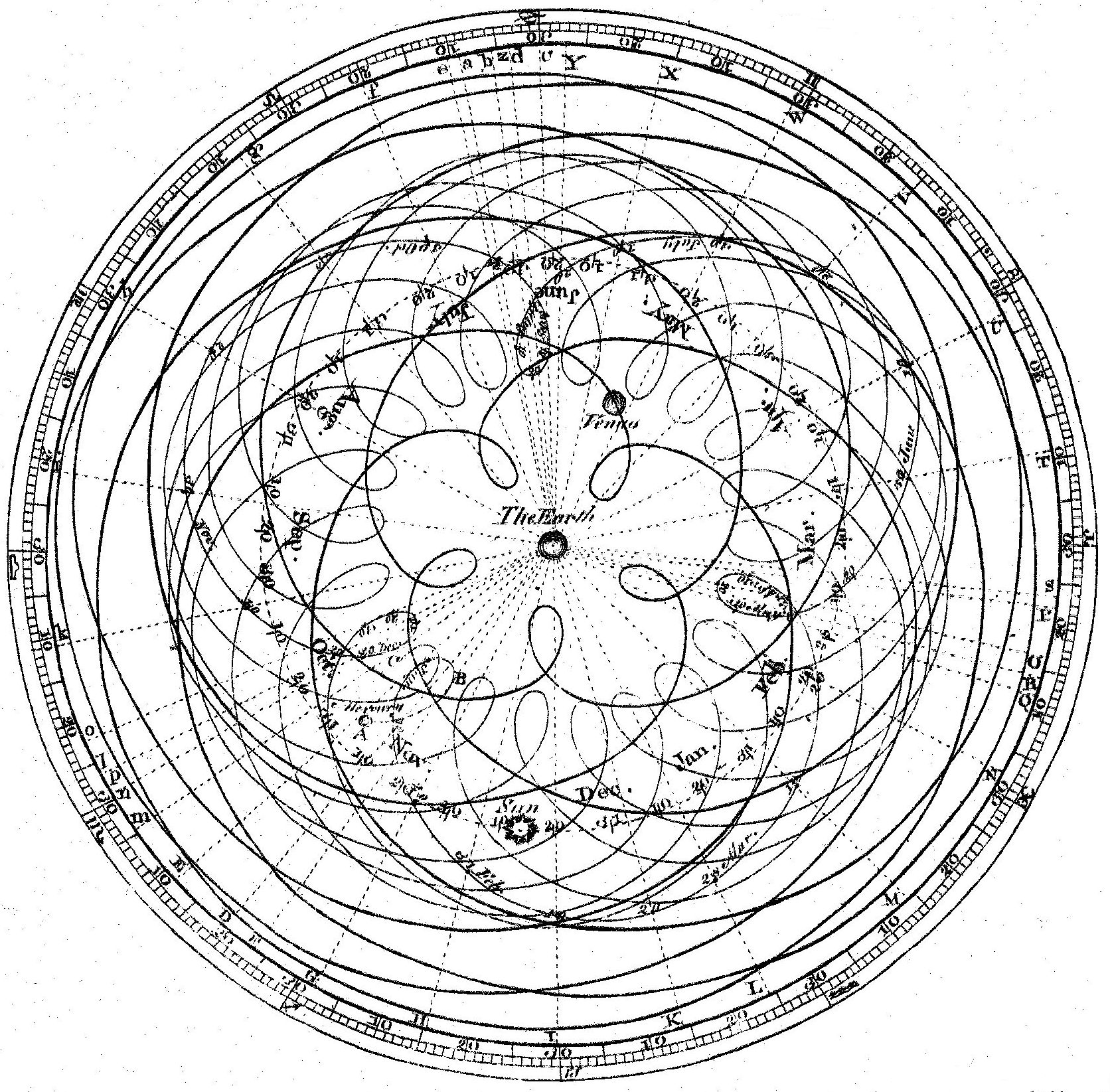

Ptolemy and his descendants had gradually refined the geocentric model over 1400 years to match astronomical trajectories plotted by stargazers. Sure, it was hard to reconcile the movement of planets across the sky with orbits around the Earth, but not impossible. Ptolemaic astronomers gave each planet off-center orbits (“eccentrics”), offset points from which its motion appeared uniform (“equants”), and mini-orbits (“epicycles”); stacking enough epicycles on epicycles made it possible to fit the data with increasing precision. The arbitrary complexity of these models, some with as many as 80 epicycles, is reminiscent of the complexity of neural networks.
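
In modern terms, a sum of circles each turning at a whole-number multiple of one basic rate is a Fourier series, so the point about stacking epicycles can be sketched with a discrete Fourier transform. This is an illustration under assumptions, not a reconstruction of how Ptolemaic astronomers actually worked: the target is a hypothetical Kepler ellipse with eccentricity 0.5 (more eccentric than any planet's orbit), sampled at 128 equal time steps, and the helper names are invented here.

```python
import cmath
import math


def kepler_orbit(n: int, ecc: float, a: float = 1.0) -> list[complex]:
    """Positions on a Kepler ellipse (focus at the origin), sampled at n equal time steps."""
    b = a * math.sqrt(1 - ecc**2)
    points = []
    for j in range(n):
        mean_anomaly = 2 * math.pi * j / n
        ecc_anomaly = mean_anomaly  # starting guess for Newton's method
        for _ in range(50):  # solve Kepler's equation E - e*sin(E) = M
            ecc_anomaly -= (ecc_anomaly - ecc * math.sin(ecc_anomaly) - mean_anomaly) / (
                1 - ecc * math.cos(ecc_anomaly)
            )
        points.append(complex(a * (math.cos(ecc_anomaly) - ecc), b * math.sin(ecc_anomaly)))
    return points


def epicycle_terms(points: list[complex]) -> dict[int, complex]:
    """DFT: term k is a circle turning k times per orbit (k < 0 turns backward, k = 0 is a fixed offset)."""
    n = len(points)
    return {
        k: sum(z * cmath.exp(-2j * math.pi * j * k / n) for j, z in enumerate(points)) / n
        for k in range(-(n // 2), n - n // 2)
    }


def rms_error(points: list[complex], terms: dict[int, complex], n_circles: int) -> float:
    """Root-mean-square gap between the data and the n_circles largest terms added together."""
    n = len(points)
    kept = sorted(terms, key=lambda k: abs(terms[k]), reverse=True)[:n_circles]
    total = 0.0
    for j, z in enumerate(points):
        approx = sum(terms[k] * cmath.exp(2j * math.pi * j * k / n) for k in kept)
        total += abs(z - approx) ** 2
    return math.sqrt(total / n)


orbit = kepler_orbit(n=128, ecc=0.5)
terms = epicycle_terms(orbit)
circle_counts = (1, 2, 4, 8, 16, 128)
errors = [rms_error(orbit, terms, c) for c in circle_counts]
for count, err in zip(circle_counts, errors):
    print(f"{count:3d} circles: RMS error {err:.2e}")
```

The error shrinks with every circle added and drops to zero at the sample points only when all 128 terms are kept: a fit that can be made as precise as you like without ever stating the single rule that produces the ellipse.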

According to Gingrich, Copernicus’ real revolution wasn’t a better fit to the data but a simple solution free of Ptolemaic curlicues, one that hinted at underlying laws generalizable to other scenarios. Copernicus’ model inspired Kepler to derive mathematical laws of planetary motion, which in turn inspired Newton to pen the laws that underpin all of classical mechanics. Copernicus’ aesthetic minimalism led not just to prediction but to explanation and understanding.

Gingrich argued that a key requirement for such universal applications is mathematical brevity. A common justification for this prerequisite is that the wonders of nature, at least those we can comprehend, spin out from a few inherently simple rules: what my former classmate Brian Greene calls the “elegant universe.” All of classical electricity and magnetism, for example, can be boiled down to just two equations when written in the four-dimensional notation of relativity.
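
For the curious, here is that brevity written out. With the electromagnetic field tensor $F^{\mu\nu}$ (which packages the electric and magnetic fields) and the four-current $J^\nu$ (charge and current density), the four familiar Maxwell equations in SI units collapse into the two below; the exact signs depend on the metric and field-tensor conventions chosen.

$$
\partial_\mu F^{\mu\nu} = \mu_0 J^\nu,
\qquad
\partial_\lambda F_{\mu\nu} + \partial_\mu F_{\nu\lambda} + \partial_\nu F_{\lambda\mu} = 0
$$

The first equation contains Gauss’s law and the Ampère-Maxwell law; the second contains Gauss’s law for magnetism and Faraday’s law of induction.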

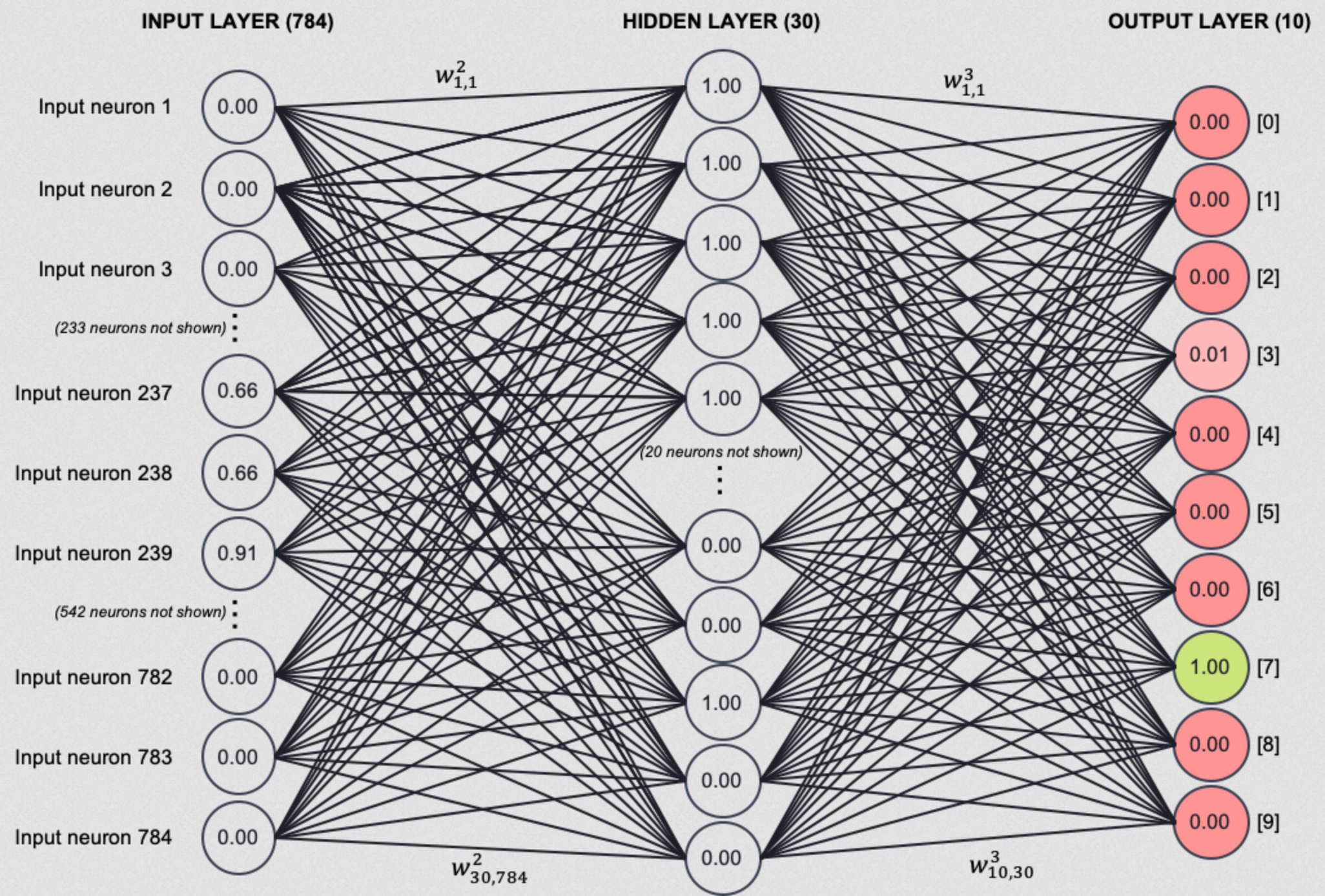

Today many heralds of artificial general intelligence are making the same mistake as Ptolemy and his followers. Because transformers can simulate a predictable world, we presume they are also generating models of it. But this confuses a set of universal axioms with a mechanism recursively refined to match data. Large language “models” do have many useful features, but compared to the mathematical models devised by human scientists, all transformers are overfitted to their data. The next Copernicus will not be an AI, though it may be a scientist working with one.

Top: The coherent force vectors proposed by the scientific model versus the arbitrary vectors proposed by transformers. Top right: James Ferguson, Representation of the apparent motion of the Sun, Mercury, and Venus from the Earth (1771). Bottom right: a three-layer neural network diagram (via Duif).